If you’re still asking whether AI is strategic, you’re already running last year’s company. In 2024, global corporate AI investment hit roughly $252 billion, more than 13x what it was a decade ago. Generative AI alone pulled in about $34 billion, over a fifth of all private AI investment. In parallel, between 78% and 88% of organizations now say they use AI in at least one business function, and 71–79% report using generative AI.

On the ground, spending is no longer theoretical. One dataset of 45k+ US businesses shows paid AI adoption rising from 5% in early 2023 to 43.8% by late 2025, with contract values jumping from $39k to $530k and on track for ~$1M in 2026.

Put simply: AI is infrastructure now. The question for boards is no longer “Should we do AI?” but “How far behind are we, and how fast can we catch up?”

Below are 15 hard, evidence‑based truths drawn from the State of AI Report 2025, Stanford’s AI Index 2025, and McKinsey’s The State of AI in 2025—and what they imply for how executives, boards, and organizations should navigate 2026 and beyond.

1. AI is now infrastructure. Opting out is no longer a neutral choice.

Stanford’s AI Index shows AI business usage jumped from 55% in 2023 to 78% in 2024, and gen‑AI usage more than doubled from 33% to 71% in just one year. McKinsey’s 2025 survey finds an even higher level: 88% of organizations regularly use AI in at least one function, and 79% use gen‑AI.

The State of AI 2025 zooms in on real spend: paid AI adoption among US businesses in Ramp’s dataset rose from 5% (Jan ’23) to 43.8% (Sept ’25), with 12‑month retention improving from ~50% to 80% and average contract values rising more than 10x.

What this means for leaders

If your answer to “Do we use AI?” is anything other than “yes, across multiple functions,” you’re not cautious—you’re behind baseline market behavior.

The competitive question is now: Where will you be better than the default? If your AI posture is just “we bought copilots,” you’ve matched the average, not gained an edge.

2. The capability frontier is racing forward—but reasoning is still fragile.

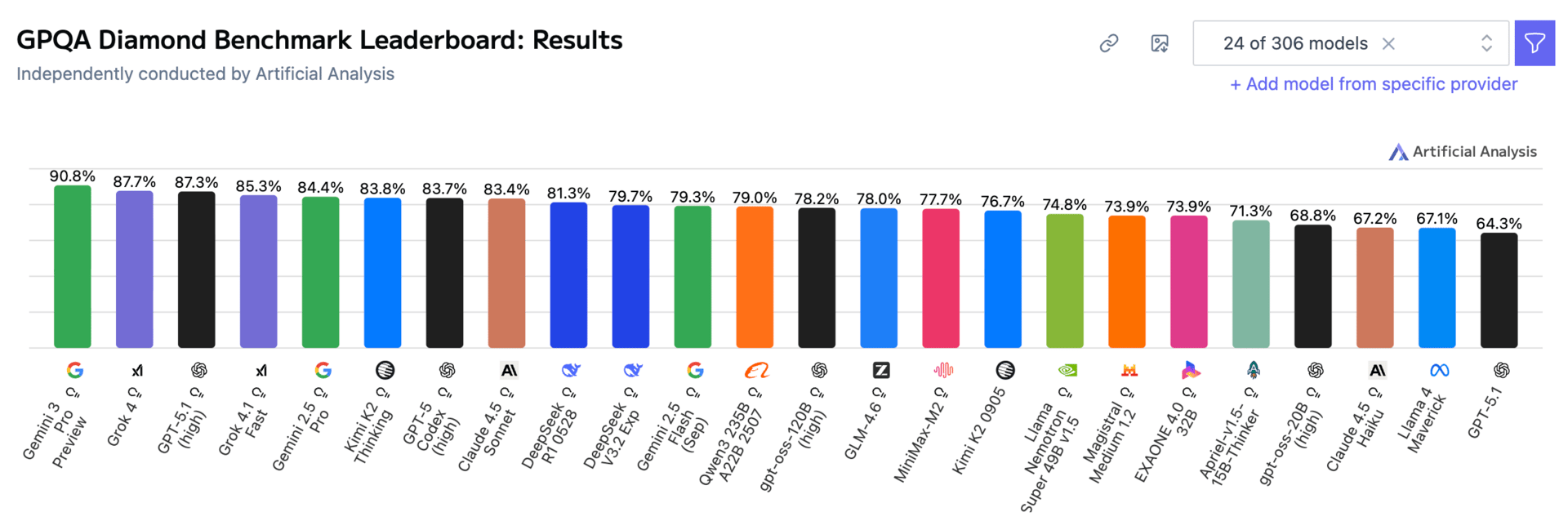

On the headline benchmarks, progress is brutal. In just one year, top model scores rose by 18.8 percentage points on MMMU, 48.9 points on GPQA, and 67.3 points on SWE‑bench, which tests real‑world code base modifications.

OpenAI’s “reasoning” models (o1, o3) show what’s possible: o1 scores 74.4% on an International Mathematical Olympiad qualifier versus 9.3% for GPT‑4o, using test‑time reasoning that iterates through solutions. But that performance is almost six times more expensive and 30x slower than GPT‑4o.

Even so, Stanford is blunt: complex reasoning remains unsolved. Models still can’t reliably handle tasks where a formal solution exists—like multi‑step arithmetic, planning, or larger‑scale logical problems—especially when they differ from the training distribution.

What this means for leaders

Don’t confuse benchmark wins with trustworthiness. For high‑stakes use cases (finance, safety, legal, medical), design human‑in‑the‑loop workflows by default.

Be skeptical of vendors promising “autonomous reasoning” on open‑ended tasks. Demand task‑specific evaluation on your data, not generic benchmark slides.
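One way to make "task‑specific evaluation on your data" concrete is a small scoring harness. The sketch below is a minimal illustration, not a vendor API: `evaluate`, `toy_model`, and the sample prompts are all hypothetical names and data, and exact‑match scoring is an assumption that only suits tasks with a single correct answer.

```python
import math
from typing import Callable, Iterable, Tuple


def evaluate(model: Callable[[str], str],
             examples: Iterable[Tuple[str, str]]) -> dict:
    """Score a model on labelled (prompt, expected_answer) pairs from your own data."""
    results = [
        model(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in examples
    ]
    n = len(results)
    if n == 0:
        raise ValueError("need at least one labelled example")
    accuracy = sum(results) / n
    # Normal-approximation 95% interval: a rough guide to how far the
    # score could move on a different sample of the same size.
    margin = 1.96 * math.sqrt(accuracy * (1 - accuracy) / n)
    return {
        "n": n,
        "accuracy": accuracy,
        "ci_low": max(0.0, accuracy - margin),
        "ci_high": min(1.0, accuracy + margin),
    }


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a vendor model; replace with a real call."""
    return "approve" if "invoice" in prompt else "escalate"


# Hypothetical labelled examples; in practice these come from your own records.
sample = [
    ("invoice under limit", "approve"),
    ("contract dispute", "escalate"),
    ("invoice over limit", "escalate"),
    ("refund request", "escalate"),
]
report = evaluate(toy_model, sample)
print(report)
```

With only four examples the interval is very wide, which is the point: a handful of demo prompts tells you almost nothing. A few hundred labelled cases drawn from real work give a far tighter read than any benchmark slide.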

3. Intelligence‑per‑dollar is compounding. Your cost curves are out of date every planning cycle.

The AI Index tracks a more than 280x reduction in the cost of GPT‑3.5‑level inference, from about $20 to $0.07 per million tokens between November 2022 and October 2024. It also shows the cost to run a demanding benchmark (GPQA Diamond) dropping from $15 to about $0.12.

On the model side, the smallest system scoring above 60% on MMLU went from PaLM (540B parameters) in 2022 to Phi‑3‑mini (3.8B parameters) in 2024—a 142x reduction in model size for similar performance.

The State of AI report goes further: in 2025, GPT‑5 is estimated to deliver around 12x better cost‑performance than a leading competitor on key benchmarks, while capability‑per‑dollar for major labs improves on 3–8 month doubling cycles.

What this means for leaders

Assume AI unit economics will improve every 6–18 months. Multi‑year contracts priced on today’s cost curves are inherently risky.

Push finance and procurement to treat AI like cloud compute: tiered commitments, renegotiation windows, and optionality, not inflexible multi‑year “AI platform” licenses locked at 2025 prices.
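To see why fixed multi‑year pricing is risky, it helps to run the doubling arithmetic. The sketch below assumes capability‑per‑dollar doubles at a constant rate, which is a simplification; the function names are illustrative, and the 3 and 8 month inputs are the doubling cycles cited above.

```python
import math


def improvement_multiple(contract_months: float, doubling_months: float) -> float:
    """How many times more capability a dollar buys at contract end than at signing,
    assuming a constant doubling time."""
    return 2 ** (contract_months / doubling_months)


def implied_doubling_months(fold: float, months: float) -> float:
    """Doubling time implied by an observed fold improvement over a period.
    Useful for plugging in a vendor's own price history."""
    return months * math.log(2) / math.log(fold)


for term in (12, 24, 36):
    fast = improvement_multiple(term, doubling_months=3)
    slow = improvement_multiple(term, doubling_months=8)
    print(f"{term}-month contract: {slow:,.1f}x to {fast:,.0f}x better price-performance by expiry")
```

Even at the slow end of the range, a 36‑month contract ends in a market where the same dollar buys roughly 22x more. That is the case for renegotiation windows in plain numbers.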

4. Power, chips, and geography—not algorithms—are the real hard constraints.

The frontier is no longer bottlenecked by clever architectures; it’s bottlenecked by iron and electricity.

The State of AI 2025 shows the US controlling about 75% of global AI supercomputer capacity—around 850,000 H100‑equivalent GPUs, compared to China’s 110,000. At the same time, 80% of this capacity is now in private hands, up from 40% in 2019, and the leading AI supercomputer by 2030 could require 2 million chips, 9GW of power, and $200 billion in capex—roughly nine nuclear reactors’ worth of capacity.

Anthropic and peers expect frontier training to require 5GW data centers by 2028, with multiple ~1GW clusters coming online around 2026.

Power is the choke point. SemiAnalysis estimates a 68GW US power shortfall by 2028 if projected AI data center demand materializes; the US Department of Energy warns blackouts could become 100x more frequent by 2030, and one analysis projects up to 40% increases in residential rates.

Meanwhile, the Gulf is turning AI into an energy‑backed industrial policy. The US–UAE AI Acceleration Partnership commits $1.4 trillion over 10 years, including a 5GW “Stargate UAE” cluster and up to 500k Blackwell GPUs. Separate US–Saudi deals total about $600 billion, including funding for US AI data centers, and UAE capital is also backing a 1GW AI data center in France.

What this means for leaders

If AI is central to your strategy, energy and location strategy are board‑level issues, not just facilities problems. Think PPAs, co‑location with power, and strategic cloud partnerships.

Expect regional AI disparities. Access to cheap, reliable power and chips will increasingly dictate where you can run the most demanding workloads.

5. The AI race is structurally bipolar: closed US labs vs a surging Chinese open‑weight ecosystem.

Stanford’s AI Index finds US‑based institutions released 40 notable models in 2024, versus 15 from China and 3 from Europe. The US still leads in model count and investment, but China dominates publications and holds roughly 70% of global AI patents, and its models have largely closed the performance gap on major benchmarks like MMLU and HumanEval.

The State of AI 2025 reframes this as a two‑pole system:

US pole – OpenAI, Anthropic, Google DeepMind, xAI and others pushing closed frontier models tightly bound to their own clouds and proprietary infrastructure.

China pole – labs like DeepSeek shipping increasingly competitive open‑weight models and reasoning systems, backed by the state’s AI‑Plus plan to embed AI across every sector by 2035, along with new regulations on AI content labeling and cybersecurity standards.

What this means for leaders

Your model and infra choices are now geopolitical. Chinese‑origin models or infra may trigger regulatory, reputational or procurement friction in the US/EU—and vice versa.

Build a multi‑model strategy: at least two frontier providers plus one strong open‑weight path, with clear criteria for what runs where based on sensitivity, performance and jurisdiction.

6. AI‑first companies are already tens of billions in revenue and resetting productivity norms.

The State of AI report tracks a leading cohort of 16 AI‑first companies generating $18.5B in annualized revenue as of August 2025. A “Lean AI Leaderboard” of 44 companies (each >$5M ARR, <50 FTE, <5 years old) collectively produces >$4B in revenue with >$2.5M revenue per employee and ~22 employees on average.

The growth dynamics are just as stark:

The 100 fastest‑growing AI companies on Stripe reach $5M ARR 1.5x faster than the top SaaS companies did in 2018.

AI firms in both the $1–20M and $20M+ revenue bands have been growing revenue at about 1.5x the pace of peers since late 2023.

Specialized generative media players such as ElevenLabs, Synthesia, and Black Forest Labs are already well into the hundreds of millions in ARR, often doubling revenue in under a year while keeping teams lean and gross margins high.

What this means for leaders

Revenue per employee of $1–3M is becoming “normal” for the best AI‑first firms. If you sit at $150–300k per employee, that’s not just a productivity gap; it’s a structural disadvantage.

Assume a material share of future profit pools in your category will be captured by tiny, deeply AI‑leveraged teams. Your strategy has to assume these players exist, even if they’re not visible in your market yet.

7. Enterprise adoption is broad—but most organizations are stuck in pilot mode.

The AI Index finds that by 2024, 78% of organizations used AI and 71% used gen‑AI in at least one function. But most report cost savings under 10% and revenue gains under 5% per function.

McKinsey’s survey is even clearer:

88% of organizations regularly use AI.

79% have adopted gen‑AI.

Yet only around one‑third say they are scaling AI, and just 7% report AI is fully deployed and integrated across the enterprise.

State of AI’s commercial data tells the same story from the vendor side: paid AI adoption and contract sizes are soaring, but much of that usage still sits in isolated tools and pilots, not re‑engineered core processes.

What this means for leaders

Stop counting “number of pilots” as success. Ask: In which end‑to‑end workflows is AI now just “how we work”?

Your job in 2025–26 is to move from project portfolios to a platform + product model: shared data, models and tooling, with dedicated product teams for key AI use cases.

8. A small group of AI high performers is quietly pulling away.

McKinsey defines “AI high performers” as organizations where >5% of EBIT is attributable to AI and respondents report “significant” value from AI. Only about 6% of companies in the survey qualify.

These high performers look nothing like the median:

They are 3.6x more likely than others to say they’ll use AI for transformative change, not just incremental improvements.

They consistently set growth and innovation objectives on top of efficiency—roughly 80% of them do so, versus about 50% of other firms.

They scale AI faster, use it across more functions, invest more in AI capabilities and push into agentic systems earlier.

What this means for leaders

Benchmarking against “average AI users” is a trap. Your future competitors look a lot more like this 6% cohort.

Internally, treat AI like digital transformation 2.0: top‑down ambition, explicit P&L ownership, and willingness to redesign org structures and workflows—not a scattered tools play.

9. Agents are real—but brittle. Treat them as products, not magic.

The narrative: “Agents will run your business.” The data: not yet.

McKinsey finds:

62% of organizations are experimenting with AI agents.

23% are scaling at least one agentic system somewhere in the enterprise.

But in any given function, no more than 10% of organizations report scaling agents.

Usage clusters in IT, knowledge management and service desks, and is most advanced in tech, media/telecom, and healthcare.

Stanford’s AI Index adds nuance: new benchmarks like RE‑Bench show AI agents can outperform human experts on short, two‑hour tasks, sometimes by a factor of four, but humans regain the lead over longer 32‑hour tasks. Agents are good at fast sprints, not yet at extended, open‑ended work.

On the flip side, State of AI documents that threat actors already use agents to automate cyberattacks, bundling open models into malware that hijacks developer tools and hunts for credentials and sensitive data.

What this means for leaders

Use 2025–26 to build muscle for designing and governing agents, not to plaster “agent platforms” across the enterprise. Start with a few high‑value, constrained workflows (IT support, internal research, customer ops) with clear SLAs and kill‑switches.

Treat agents as products with owners, security reviews and lifecycle management—not as invisible “automation glue” grafted onto every process.
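In practice, "clear SLAs and kill‑switches" means every agent runs inside explicit limits that someone owns. The sketch below is one hypothetical way to express that; `GuardedAgent` and its fields are illustrative names, and the `step` function stands in for whatever agent framework you actually use.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class GuardedAgent:
    """Hypothetical wrapper that runs an agent step function under explicit limits."""
    name: str
    owner: str                              # accountable product owner
    step: Callable[[int], Optional[str]]    # returns an action, or None when done
    max_steps: int = 10                     # hard cap on actions per run
    max_seconds: float = 30.0               # crude stand-in for an SLA
    killed: bool = False
    log: List[str] = field(default_factory=list)

    def kill(self) -> None:
        """Kill switch: any later run stops before taking another action."""
        self.killed = True

    def run(self) -> str:
        started = time.monotonic()
        for i in range(self.max_steps):
            if self.killed:
                return "stopped: kill switch"
            if time.monotonic() - started > self.max_seconds:
                return "stopped: time budget exceeded"
            action = self.step(i)
            if action is None:
                return "completed"
            self.log.append(action)         # audit trail of every action taken
        return "stopped: step budget exceeded"


# A well-behaved agent that finishes after three actions.
helpdesk = GuardedAgent("it-helpdesk", "it-ops",
                        step=lambda i: f"ticket-{i}" if i < 3 else None)
helpdesk_status = helpdesk.run()

# A runaway agent that never finishes and hits the step budget.
runaway = GuardedAgent("looping-agent", "it-ops", step=lambda i: "retry", max_steps=5)
runaway_status = runaway.run()

print(helpdesk_status, helpdesk.log)
print(runaway_status, len(runaway.log))
```

The design choice worth copying is that limits, owner, and audit log live on the agent itself, so no agent can be deployed without them.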

10. AI is becoming a co‑scientist in R&D—especially in healthcare and materials.

AI has quietly moved from “fancy calculator” to core research instrument.

In 2023, the US FDA approved 223 AI‑enabled medical devices, up from just six in 2015. Waymo now delivers 150,000+ fully autonomous rides per week, and Baidu’s Apollo Go robotaxis operate across multiple Chinese cities. All of this is evidence of AI embedded in real‑world systems, not just prototypes.

The AI Index highlights AI’s growing role in drug discovery, materials design, protein structure prediction and scientific coding, with foundation models increasingly fine‑tuned for scientific domains.

State of AI extends this to the physical world: nearly $2B raised in 2025 by 155 humanoid robotics companies, with eight unicorns and early commercial deployments in logistics and manufacturing.

What this means for leaders

If you’re in pharma, industrials, energy, automotive, or materials, you should be asking, “Where is AI in our R&D pipeline today—and where should it be?”

Competitive advantage will come from AI‑native experimentation pipelines: integrated lab automation, simulation, model‑guided design, and data infrastructure tuned to feed foundation models.

11. Productivity gains are real—but they reshape the workforce more than they simply shrink it.

Randomized trials summarized in the AI Index show a consistent pattern: AI tools boost output and quality, especially for lower‑skill workers, narrowing performance gaps by 10–30 percentage points in writing, coding, and customer support tasks.

McKinsey sees early labor impacts:

17% of organizations report AI drove workforce reductions in at least one function over the past year.

About 32% expect AI to reduce their workforce by more than 3% over the next three years, while 13% expect net increases. Larger companies and high performers expect bigger shifts in both directions.

At the same time, AI‑related roles and skills are spreading across industries, with clear wage premiums for AI‑proficient workers and growing demand for hybrid roles (e.g., product managers, marketers, and lawyers who can work effectively with AI).

What this means for leaders

Treat AI as a reallocation engine, not just a cost‑cutting tool. The organizations that win will be those that move people into higher‑value, AI‑augmented roles faster than competitors, not those that only chase headcount reduction.

You need a five‑year workforce plan: which roles shrink, which grow, what new hybrid profiles you need, and how you’ll reskill at scale.

12. Risk, misuse, and safety debt are compounding faster than your controls.

The AI Incident Database recorded 233 AI‑related incidents in 2024, a 56% increase over 2023. Cases range from wrongful arrests due to flawed facial recognition to deepfake harassment and chatbots contributing to self‑harm.

On the enterprise side, AI Index data (drawing on McKinsey’s survey) shows cybersecurity (66%), regulatory compliance (63%), and privacy (60%) as the top AI risks leaders consider relevant—but far fewer organizations are actively mitigating them. The gap is especially large for IP infringement and reputational risk.

State of AI 2025 adds a sobering structural fact: 11 leading US AI safety‑science organizations combined will spend about $133.4M in 2025, while major labs individually spend more than that in a single day. That leaves the ecosystem heavily reliant on internal safety teams that ultimately answer to the same commercial incentives as product and growth.

What this means for leaders

You cannot outsource AI risk to vendors and regulators. Build a formal AI risk register, covering misuse, model failure, data leakage, cyber‑attack surfaces, and regulatory exposure.

Stand up AI incident response playbooks now: who investigates, who shuts systems down, how you notify affected parties, how you remediate. Assume an AI incident will hit your organization in the next 12–24 months.
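A risk register does not need special tooling to start. The sketch below assumes a simple likelihood times impact score on 1 to 5 scales, which is a common convention rather than anything the reports prescribe; the entries, owners, and threshold are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Risk:
    name: str
    category: str        # e.g. misuse, model failure, data leakage, cyber, regulatory
    likelihood: int      # 1 (rare) to 5 (almost certain)
    impact: int          # 1 (minor) to 5 (severe)
    owner: str
    mitigation: str = "" # empty string means no mitigation in place yet

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def unmitigated_top_risks(register: List[Risk], threshold: int = 12) -> List[Risk]:
    """High-scoring risks with no mitigation recorded, worst first."""
    open_risks = [r for r in register if not r.mitigation and r.score >= threshold]
    return sorted(open_risks, key=lambda r: r.score, reverse=True)


# Hypothetical entries for illustration only.
register = [
    Risk("Prompt injection via support chatbot", "cyber", 4, 4, "security"),
    Risk("Customer data pasted into public tools", "data leakage", 3, 5, "it",
         mitigation="DLP policy and approved-tool list"),
    Risk("Hallucinated figures in client reports", "model failure", 3, 4, "product"),
    Risk("Unlabelled synthetic content", "regulatory", 2, 3, "legal"),
]

top = unmitigated_top_risks(register)
for r in top:
    print(f"{r.score:>2}  {r.category:<14} {r.name} (owner: {r.owner})")
```

The useful output is the list of high‑scoring risks with nobody mitigating them. That list is what the board should see each quarter.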

13. Governance and regulation are shifting from principles to enforcement—with teeth.

AI governance isn’t a whitepaper exercise anymore.

The AI Index reports that US state‑level AI laws exploded from 49 cumulatively in 2023 to 131 in 2024, while AI‑related federal regulations more than doubled from 25 to 59 in a single year, spanning 42 agencies. Mentions of AI in legislative proceedings across 75 countries grew 21.3% year‑on‑year and have increased more than ninefold since 2016.

Governments are simultaneously funding AI infrastructure at scale: Canada’s $2.4B package, China’s $47.5B semiconductor fund, France’s €109B commitment, India’s $1.25B pledge, and Saudi Arabia’s $100B Project Transcendence.

China’s new AI content labeling rules, three national cybersecurity standards for datasets and gen‑AI services, and its AI‑Plus industrial policy aim for full AI penetration across sectors by 2035. Meanwhile, the US is leaning into an “America‑first AI” stance and tightening export controls on chips and semiconductor equipment.

What this means for leaders

Treat policy risk as a core dimension of AI strategy. You need a clear map of where your models run, where your data lives, and which jurisdictions’ rules you’re exposed to.

Pull legal, compliance, and policy into the design phase of major AI initiatives. Retrofitting compliance onto live systems will be slow, expensive, and sometimes impossible.

14. The ecosystem is fragmenting. Multi‑model, open‑weight, and on‑prem are strategic choices, not IT plumbing.

Top models are no longer only gigantic, closed US systems. Stanford shows the performance gap between US and Chinese models on MMLU and HumanEval shrinking from double digits to near parity by 2024, even as the US continues to dominate investment and model count.

At the same time, smaller models are getting very good: Microsoft’s Phi‑3‑mini (3.8B parameters) matches or exceeds the performance threshold once reserved for 540B‑parameter models like PaLM.

The State of AI report highlights China’s DeepSeek and other open‑weight labs training competitive models at headline costs as low as the widely quoted “$5M frontier model,” while the Chinese government promotes open source communities as a strategic export. US labs, by contrast, continue to tie the highest‑end capabilities to their own proprietary clouds and hardware.

McKinsey and the AI Index both show enterprises increasingly using multiple models across functions—some via SaaS, some via APIs, some embedded in internal systems.

What this means for leaders

You need an explicit AI architecture strategy: which workloads justify paying for frontier closed models, where smaller or open‑weight systems are sufficient, and when you need on‑prem or VPC deployments for data sensitivity.

Treat model choice as a governance decision, not just a developer preference. Your board should understand the trade‑offs between lock‑in, performance, cost, sovereignty, and risk.
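An architecture strategy becomes governable once the routing rules are written down. The sketch below is one illustrative policy, not a standard: the `Workload` fields, tier names, and rule order are all assumptions you would replace with your own criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    data_sensitivity: str      # "public", "internal", or "restricted"
    needs_frontier: bool       # does quality on this task require a top closed model?
    residency_required: bool   # must data stay in a specific jurisdiction?


def route(w: Workload) -> str:
    """Return a deployment tier for a workload under an illustrative policy."""
    if w.data_sensitivity not in {"public", "internal", "restricted"}:
        raise ValueError(f"unknown sensitivity: {w.data_sensitivity}")
    # Sensitive or residency-bound data stays on infrastructure you control.
    if w.data_sensitivity == "restricted" or w.residency_required:
        return "on_prem_or_vpc"
    # Pay frontier prices only where the task needs it.
    if w.needs_frontier:
        return "frontier_closed_api"
    # Everything else defaults to the cheapest adequate option.
    return "small_or_open_weight"


# Hypothetical workloads for illustration.
workloads = [
    Workload("patient-notes summarization", "restricted", True, True),
    Workload("contract analysis", "internal", True, False),
    Workload("ticket tagging", "internal", False, False),
]
for w in workloads:
    print(f"{w.name} -> {route(w)}")
```

Putting sensitivity ahead of capability in the rule order is itself a governance decision: it means a restricted workload never reaches an external API, even when a frontier model would perform better.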

15. The next edge is an AI‑native operating model—not a better chatbot.

Across all three reports, the organizations that look healthiest for 2026–2030 are doing five things in common:

They put AI directly on the P&L.

They concentrate on functions where data show the most value: McKinsey and the AI Index see the strongest cost savings in software engineering, IT, service operations and supply chain, and revenue uplift in marketing & sales, strategy/corporate finance, and product development. If your roadmap doesn’t overweight these areas, you’re walking past the obvious money.

They design for scale from day one.

They build shared data and model platforms, invest in standardized evaluation and monitoring, and centralize security and governance. Stanford’s work on benchmark saturation and the lack of standardized safety evaluations underscores the need for internal eval stacks, not just vendor marketing decks.

They invest as heavily in people and process as in models.

McKinsey’s high performers redesign workflows, retrain staff, and set explicit growth and innovation goals alongside efficiency. They don’t assume tools alone will transform the business.

They understand the geopolitics and infrastructure.

They track where their compute lives, how reliant they are on US‑centric hyperscalers vs Chinese hardware/software ecosystems, and what rising power constraints or export controls could do to their roadmaps.

They close the safety and governance gap deliberately.

They don’t wait for regulators or labs to save them. They align product, security, legal, and risk around AI‑specific controls and incident response, recognizing that incidents and misuse are climbing faster than external safeguards.

If you’re in the boardroom in 2025…

The signal from these three reports is brutally consistent:

AI is already everywhere—in your employees’ workflows, your vendors’ tools, your competitors’ products.

The gap between average adopters and high performers is widening, not shrinking.

The constraints have moved from algorithms to power, chips, and geopolitics.

And the risks are scaling faster than the controls most companies have in place.

Your job is no longer to green‑light “some AI initiatives.” Your job is to decide what kind of AI organization you’re going to be—and then drive strategy, capital allocation, talent, governance, and infrastructure accordingly.

Everything else is just waiting for the future to happen to you.

Author’s note: This article synthesizes and interprets insights from three flagship 2025 AI reports—Stanford’s AI Index 2025, McKinsey’s The State of AI in 2025: Agents, Innovation, and Transformation, and the State of AI Report 2025 by Air Street Capital—to provide an integrated, evidence‑based view of the AI landscape for executives, boards, and organizational leaders.

About the Authors

Sam Obeidat is a senior AI strategist, venture builder, and product leader with over 15 years of global experience. He has led AI transformations across 40+ organizations in 12+ sectors, including defense, aerospace, finance, healthcare, and government. As President of World AI X, a global corporate venture studio, Sam works with top executives and domain experts to co-develop high-impact AI use cases, validate them with host partners, and pilot them with investor backing—turning bold ideas into scalable ventures. Under his leadership, World AI X has launched ventures now valued at over $100 million, spanning sectors like defense tech, hedge funds, and education. Sam combines deep technical fluency with real-world execution. He’s built enterprise-grade AI systems from the ground up and developed proprietary frameworks that trigger KPIs, reduce costs, unlock revenue, and turn traditional organizations into AI-native leaders. He’s also the host of the Chief AI Officer (CAIO) Program, an executive training initiative empowering leaders to drive responsible AI transformation at scale.
