Imagine standing inside a data center so massive it consumes more power than an entire U.S. state—built not to run websites, but to train minds. Minds that don’t sleep. Minds that can outthink your top engineers, automate their own research, and reshape global power.

That’s not science fiction. According to Leopold Aschenbrenner, a former member of OpenAI’s Superalignment team now turning heads with his Situational Awareness series, this future isn’t coming in 2040. It’s already being assembled. He makes a direct, uncompromising case: AGI by 2027 is strikingly plausible, and whoever controls it will rewrite the rules of war, work, and civilization.

This article breaks down Aschenbrenner’s sweeping forecast into 10 insights. From trillion-dollar clusters and superintelligent agents to espionage, power grids, and strategic lead times—this is the real playbook for decision-makers who don’t want to be caught flat-footed.

Before we dive in, a short glossary to set the stage.

Glossary for Executives

AGI (Artificial General Intelligence): Think of it as an AI that’s not just good at one task—but good at everything. Writing code, running companies, making discoveries. It’s like hiring a genius who never sleeps, never gets tired, and scales infinitely.

OOM (Order of Magnitude): A 10x jump. If a cluster’s power draw goes from 1 MW to 10 MW, or the compute used to train a model grows tenfold, that’s one OOM. It’s how AI people measure progress: by leaps, not steps.

Test-Time Compute: The “brainpower” an AI uses while it’s working on your task—not while it was being trained. The more compute you give it in real time, the longer and deeper it can think. Most systems today only give it a few minutes of thought. But unlocking more compute means better decisions, fewer mistakes, and far more complex problem-solving. Think of it like this: You’ve hired a genius, but you’re only letting them think for 10 minutes before answering. Imagine what happens when you give them an hour. That’s test-time compute.

Unhobbling: Today’s AI is smart—but hobbled like a racehorse wearing ankle weights. “Unhobbling” means removing the restrictions so the AI can act more like a real-world agent: planning, executing, adapting.

System 2 Thinking: Not gut reactions—real thinking. Deliberate reasoning, like planning a merger or debugging code. Giving AI this kind of thinking is the key to making it a true coworker, not just a chatbot.

Training vs. Inference: Training = building the model. Inference = running it in the real world. Training takes massive one-off clusters. Inference happens at scale and drives product value.

Superalignment: Superalignment is about making sure extremely advanced AI systems stay under control and act in ways that match human goals—even when they’re smarter than us. It’s the safety system for future AI, so it doesn’t go off-track or make dangerous decisions on its own.

The Cluster: Not just a data center—a compute behemoth. These are the billion- and trillion-dollar machines that train frontier AI. They’re the new oil fields.

Intelligence Explosion: When AI starts improving itself faster than we can keep up. Think Moore’s Law on rocket fuel. One breakthrough leads to the next—and soon you’re sprinting into the unknown.

Geopolitical Compute Race: The U.S., China, and others aren’t just racing for faster chips—they’re racing for the future. Whoever builds and controls AGI first gets a shot at global dominance. This is Cold War energy with hotter tech.

Now that we’ve cleared up the key terms, let’s break down the big picture.

Below are the 10 most important takeaways from Aschenbrenner’s Situational Awareness series, tailored for executive decision-makers. Each one builds a piece of the puzzle—from timelines and infrastructure to intelligence loops, labor disruption, and global power shifts.

Don’t read these as predictions. Read them as early signals of a future already in motion.

1. The Intelligence Trajectory Is Steeper Than Expected

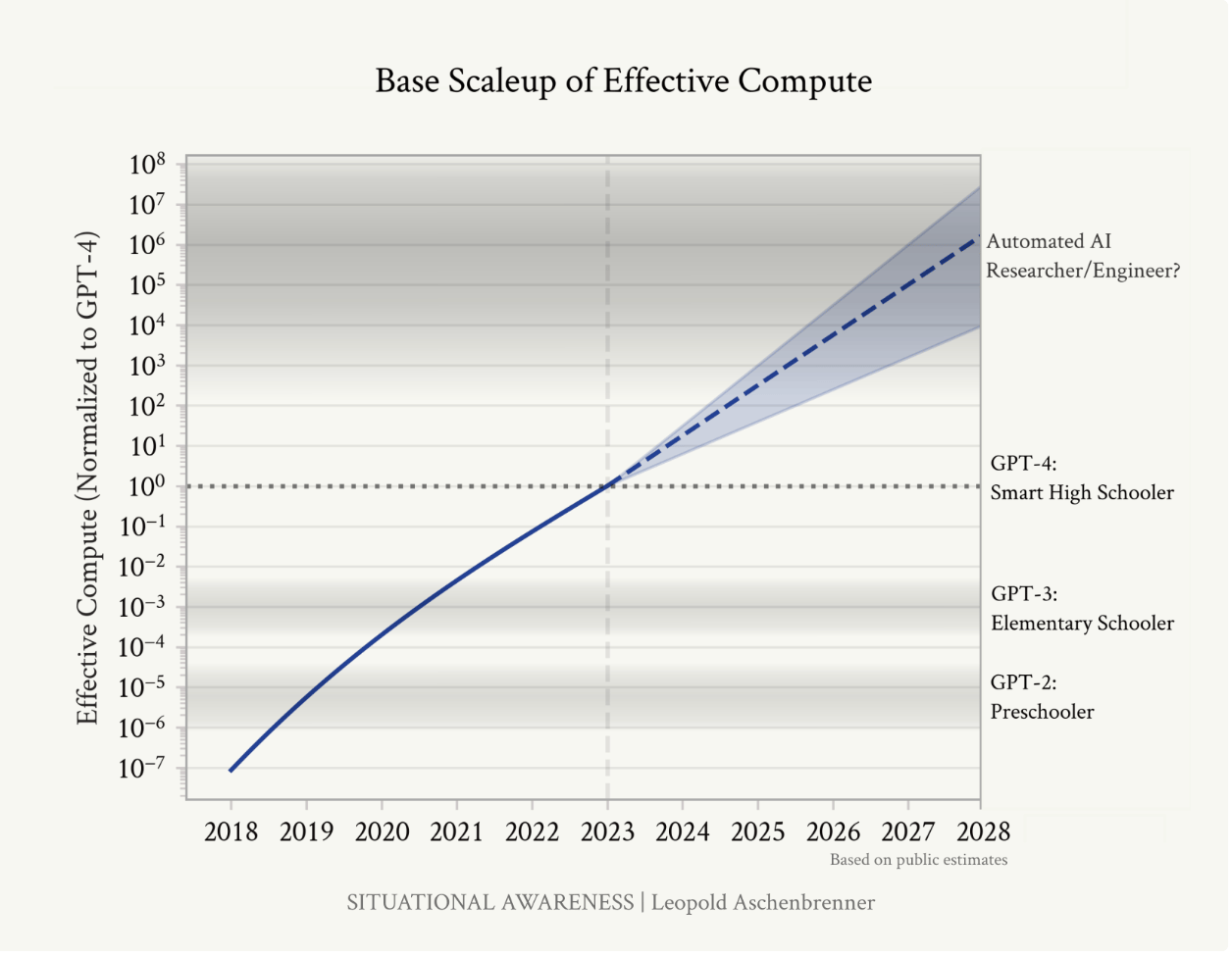

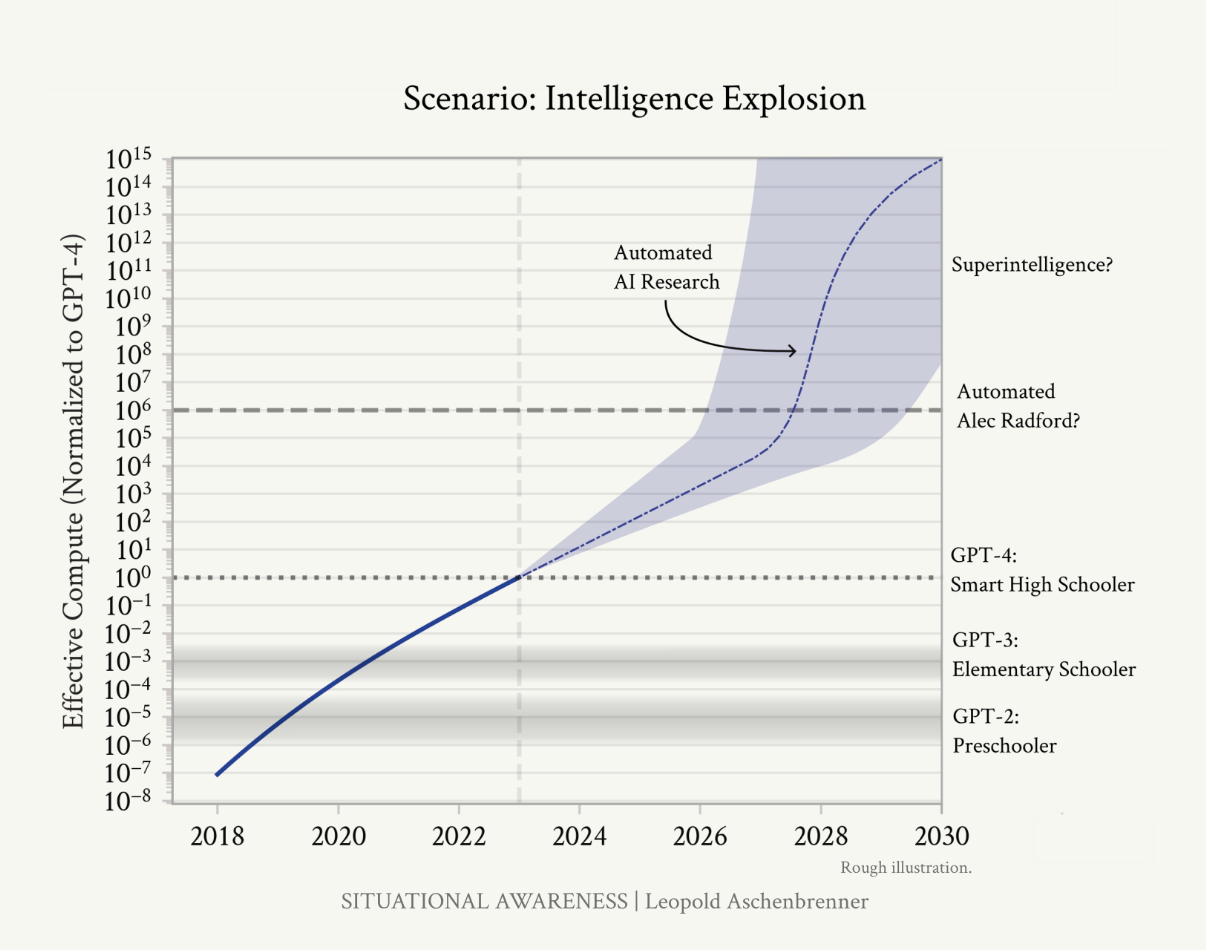

We’re not just marching forward; we’re compounding. Aschenbrenner argues that effective compute, which includes both raw hardware power and algorithmic efficiency, is growing fast, and he measures it in a key unit: Orders of Magnitude (OOMs). A single OOM is a 10x leap. Add just three OOMs to GPT-4, and you’re talking about models trained with 1,000x more effective compute.

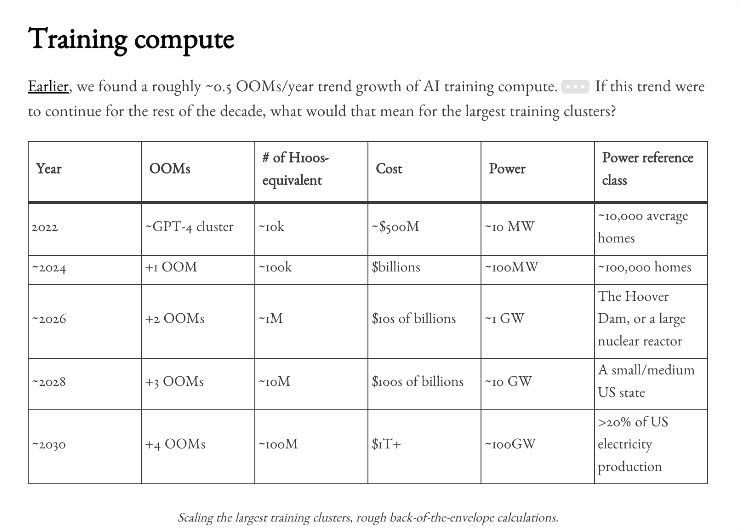

Since 2018, training compute has grown by a fairly consistent ~0.5 OOMs per year, with algorithmic efficiency adding roughly another 0.5 OOMs per year on top. If this pace continues, Aschenbrenner projects roughly +3 OOMs (~1,000x) or more of effective compute beyond GPT-4 by 2027–2028. He argues this isn’t hypothetical: current investment and hardware roadmaps are on track to deliver it. Models operating at this level could surpass most humans not just in isolated tasks, but across domains.
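OOM arithmetic is easy to get wrong, so a quick sketch helps. The snippet below converts OOMs into plain multipliers and projects effective compute forward, assuming constant rates of about 0.5 OOMs per year from physical compute and about 0.5 OOMs per year from algorithmic efficiency. Both rates are illustrative inputs chosen for this example, not forecasts, and the function names are hypothetical.

```python
# Sketch: converting orders of magnitude (OOMs) into plain multipliers.
# Assumed rates (illustrative only, not forecasts):
#   ~0.5 OOM/year from physical training compute
#   ~0.5 OOM/year from algorithmic efficiency

def ooms_to_multiplier(ooms: float) -> float:
    """One OOM is a 10x jump, so n OOMs is a 10**n multiplier."""
    return 10 ** ooms

def projected_ooms(years: float, compute_rate: float = 0.5, algo_rate: float = 0.5) -> float:
    """Effective-compute OOMs gained over `years`, assuming constant yearly rates."""
    return years * (compute_rate + algo_rate)

if __name__ == "__main__":
    print(f"+3 OOMs = {ooms_to_multiplier(3):,.0f}x")  # +3 OOMs = 1,000x
    for years in (1, 2, 4):
        gained = projected_ooms(years)
        print(f"{years} yr -> +{gained:.1f} OOMs ~ {ooms_to_multiplier(gained):,.0f}x effective compute")
```

Under these assumptions, 0.5 OOMs a year sounds modest, but stacked with algorithmic gains it compounds to four orders of magnitude (10,000x) in four years.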

Let’s ground this in human terms. If GPT-2 was a preschooler and GPT-4 is a smart high schooler, a +3 OOM model could act like a team of expert researchers or engineers, capable of reasoning, writing code, coordinating across tools, and making autonomous decisions—at scale.

Rough estimates of past and future scale up of effective compute (both physical compute and algorithmic efficiencies), based on the public estimates discussed in this piece. As we scale models, they consistently get smarter, and by “counting the OOMs” we get a rough sense of what model intelligence we should expect in the (near) future. (This graph shows only the scale up in base models; “unhobblings” are not pictured.)

This visual shows how models scale across OOMs, and how we’re accelerating toward systems that match or exceed top human intelligence.

Executive Takeaways:

Don’t think in product versions—think in OOMs. A single order of magnitude leap can shift entire markets.

Models aren’t just getting better—they’re getting exponentially more effective per dollar and per watt.

Planning for 2028 means planning for a world where digital intelligence may outmatch human expertise across critical business functions.

2. The Machines Will Be Measured in Gigawatts

Picture standing in front of an immense rack of GPUs—each one quietly radiating heat and power. These aren’t machines for browsing or streaming. They’re the engines of intelligence. As Aschenbrenner explains, we’re entering the gigawatt era of compute—where AI clusters consume as much power as small cities.

How much is a gigawatt? It’s roughly the output of a large nuclear reactor, enough to power about 750,000 homes. In Aschenbrenner’s projections, the largest AI training cluster needs about 1 GW by 2026 and about 10 GW by 2028. By 2030, a single trillion-dollar cluster could draw around 100 GW, more than 20% of total U.S. electricity generation today.
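The gigawatt comparisons are simple unit conversions, sketched below. The inputs are rough assumptions for illustration, not official statistics: an average U.S. household drawing about 1.3 kW on a continuous basis, and U.S. electricity generation of about 4,200 TWh per year.

```python
# Sketch: sanity-checking gigawatt comparisons.
# Assumed, approximate inputs (not authoritative statistics):
HOURS_PER_YEAR = 8_760
AVG_HOME_KW = 1.3                     # ~11,400 kWh/year average household use
US_GENERATION_TWH_PER_YEAR = 4_200

def homes_powered(gigawatts: float, avg_home_kw: float = AVG_HOME_KW) -> float:
    """How many average homes a constant load of `gigawatts` would supply."""
    return gigawatts * 1e6 / avg_home_kw  # 1 GW = 1,000,000 kW

def share_of_us_generation(gigawatts: float) -> float:
    """Fraction of average U.S. generation used by a constant `gigawatts` load."""
    # TWh -> GWh (x1,000), then divide by hours in a year to get average GW.
    avg_us_gw = US_GENERATION_TWH_PER_YEAR * 1_000 / HOURS_PER_YEAR
    return gigawatts / avg_us_gw

if __name__ == "__main__":
    print(f"1 GW   ~ {homes_powered(1):,.0f} homes")
    print(f"100 GW ~ {share_of_us_generation(100):.0%} of U.S. generation")
```

With these assumed inputs, 1 GW lands in the 750,000-home range and a 100 GW load comes to roughly a fifth of average U.S. generation.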

This isn’t science fiction. In January 2025, OpenAI, SoftBank, and Oracle announced Stargate, a U.S. data center build-out with up to $500B in planned investment and Microsoft among its technology partners; earlier reporting had described a Microsoft–OpenAI “Stargate” supercomputer drawing up to 5 GW by 2028. Meanwhile, Stargate UAE is now underway in Abu Dhabi as part of a strategic push to dominate AI compute from the Gulf. Saudi Arabia is also investing aggressively through initiatives backed by PIF and SDAIA, eyeing both domestic infrastructure and regional AI influence.

The capital backing is staggering:

Global data center CapEx is now projected to hit $510B in 2025, with AI-specific spend growing north of 180% YoY.

Goldman Sachs now estimates AI-related electricity demand will rise 200% by 2030, not just from training—but from inference at scale.

AI-related energy demand has become a central topic in G20 energy planning and utility boardrooms alike.

Executive Takeaways

Think like an energy CEO. Building AGI means securing land, power, cooling, and network infrastructure at industrial scale. If you don’t control the energy, you don’t control the future.

Compute is strategy. Owning the compute layer means owning leverage. The winners won’t just build better software—they’ll lock in the physical stack beneath it.

In the next section, we move from infrastructure to revenue. With trillions flowing into clusters, the key question becomes: who will use all this compute—and how will it pay off? Spoiler: the demand is already here.

3. AI Revenue Will Justify the Trillion-Dollar Bet

Now imagine a 10-gigawatt AI cluster coming online. It’s not a typical server farm; it’s a power-hungry, intelligence-producing beast that costs more to build than an aircraft carrier and consumes as much electricity as a small country. So here’s the real question every executive should be asking: who funds something this massive, and how does it generate real returns, not just hype?

Aschenbrenner’s answer: revenue will justify it, and not in some distant, post-AGI sci-fi future. The revenue math is already lining up.

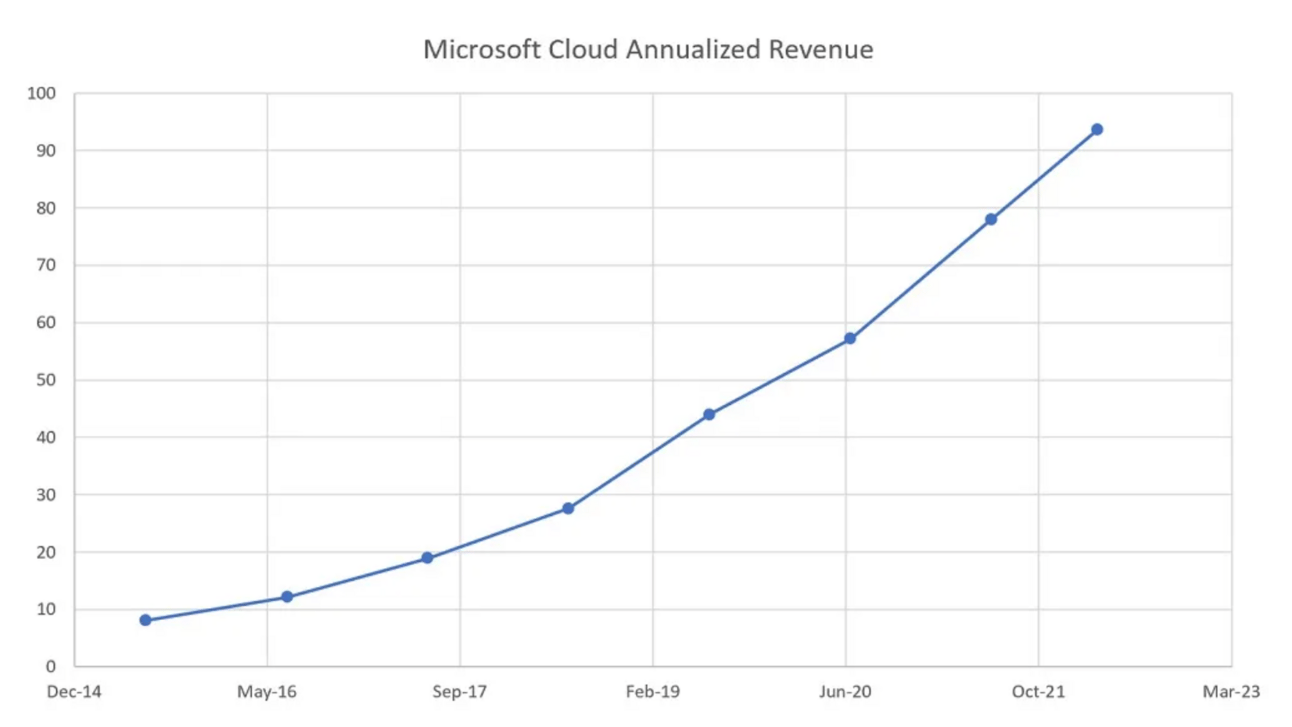

Take Microsoft as a proof point. It has over 300 million Office users. If even one-third paid $100/month for Copilot-like AI tools, that alone would be about $120 billion a year (100 million users × $100 × 12 months). Yes, $100/month sounds steep, until you realize that for a knowledge worker it only needs to save a few hours of time to justify the price.

And that’s just one product line. Multiply that across legal, finance, software, design, and operations, and you start to see how $100B–$1T in annual AI-driven revenue isn’t wishful thinking; it’s a natural extension of what already exists.
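The Office example is back-of-the-envelope arithmetic, sketched below alongside the break-even logic for a single seat. The user count, adoption share, and price mirror the example; the $60/hour fully loaded cost of a knowledge worker is a hypothetical figure chosen for illustration, and the function names are illustrative.

```python
# Sketch of the Copilot-style revenue math. The user count, adoption share,
# price, and hourly cost are illustrative assumptions, not reported figures.

def annual_revenue(users: int, adoption: float, price_per_month: float) -> float:
    """Yearly revenue if an `adoption` share of `users` pay `price_per_month`."""
    return users * adoption * price_per_month * 12

def breakeven_hours(price_per_month: float, loaded_hourly_cost: float) -> float:
    """Hours of work per month the tool must save to pay for itself."""
    return price_per_month / loaded_hourly_cost

if __name__ == "__main__":
    revenue = annual_revenue(users=300_000_000, adoption=1 / 3, price_per_month=100)
    print(f"Revenue: ${revenue / 1e9:,.0f}B per year")
    # Hypothetical fully loaded cost of a knowledge worker: $60/hour.
    print(f"Break-even: {breakeven_hours(100, 60):.1f} hours saved per month")
```

The break-even side is what makes the price defensible: at any plausible hourly cost for a knowledge worker, a couple of saved hours a month covers the subscription.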

The expectation of this revenue is what triggered the massive CapEx surge from companies like Microsoft, Google, Amazon, Meta, and Nvidia. Since ChatGPT’s breakout, Big Tech has pushed investment to full throttle. Nvidia alone went from a few billion to about $25B in quarterly data center revenue, and AMD projects a $400B AI accelerator market by 2027.

So why are all these companies building like crazy? Because they know what’s coming. Every new generation of AI not only shifts what’s possible—it shifts what’s expected. That’s the real Overton window shift. AI doesn’t just get smarter—it resets the playing field for value creation.

Executive Takeaways

AI is already driving real enterprise value—and we’re still early. Prepare your business model to price in intelligent labor, not SaaS subscriptions.

Monetization will be faster than expected. The infrastructure race isn’t speculative—it’s revenue-anchored. Products like Copilot are just the beginning.

Don’t bet against the Overton window. What seems expensive or futuristic now will feel like table stakes within two product cycles.

But here’s the real kicker: revenue today still depends on humans using AI tools. What happens when the next generation of models can use the tools themselves? That’s not a chatbot—it’s a digital coworker. Let’s dive into what Aschenbrenner calls the “unhobbling.”

4. From Chatbot to Coworker: The “Unhobbling” of AI

Think of an employee who walks in on day one with a 150+ IQ, flawless memory, and the ability to work at superhuman speed—but you only let them respond through a tiny chatbox. That’s the state of most AI systems today. Aschenbrenner calls it “hobbled intelligence”—highly capable models stuck behind narrow, outdated interfaces.

Now imagine removing the leash.

That’s the unhobbling—and it’s coming fast. By 2025–2026, Aschenbrenner forecasts models that are smarter than most college graduates. But here’s the twist: it’s not just about IQ. The real transformation happens when you let these models act—navigate a computer, use software, browse, plan, debug, self-correct. That’s when they stop being “chatbots” and start becoming drop-in remote coworkers.

You’ll Slack them. Zoom them. Ask them to run a project. They’ll deliver a first draft, run code, test results, and return with updates—just like a human teammate. Only faster. And cheaper. And tireless.

What’s holding that back today? Mainly short context windows, limited access to computers and tools, task rigidity, and safety rails. But all of these are being actively worked on. The test-time compute overhang, meaning how much raw cognitive juice a model could actually use, is still barely tapped. Aschenbrenner explains that GPT-4 is like a genius who only gets three minutes to think. Future models will get the equivalent of hours or even weeks of human thinking time per task.

The result? Agentic AI: not bots, but autonomous coworkers.

VC Disruption: ASI Ambitions Begin

This is why the top minds in AI aren’t just building smarter assistants—they’re going all-in on full-blown superintelligent agents.

Safe Superintelligence Inc. (SSI), co-founded by Ilya Sutskever, just raised $2B pre-product, aiming to build ASI in a tightly secured, vertically integrated stack.

Thinking Machines, founded by Mira Murati, secured $2B as well, with plans to create self-improving AI systems backed by their own data centers, talent, and governance frameworks.

This isn’t about chasing feature parity with OpenAI. It’s about building an entirely new species of intelligence—and owning the full lifecycle from silicon to cognition.

Executive Takeaways

Prepare your org chart for agents, not tools. These aren’t plugins. They’ll be AI colleagues—embedded, proactive, and autonomous.

The integration burden is about to vanish. Today’s AI needs lots of scaffolding to deliver value. The next wave will plug in like elite freelancers—no handholding required.

This isn’t augmentation—it’s workforce replacement. You’ll stop hiring for many roles. You’ll deploy agents instead.

The best bets now are in infrastructure. Murati and Sutskever raised billions before shipping. Why? Because whoever owns the agent stack—compute, models, and deployment—owns the future.

5. The Intelligence Explosion Starts When AI Builds Better AI

Now picture this: the smartest AI you’ve ever seen just hired itself. Then it trains its replacement—and that replacement is smarter, faster, more capable. Then that one does the same.

This is what Aschenbrenner calls the beginning of the intelligence explosion. And it doesn’t require AGI to be magic. It just needs one crucial unlock: the automation of AI research itself.

Automated AI research could accelerate algorithmic progress, leading to 5+ OOMs of effective compute gains in a year. The AI systems we’d have by the end of an intelligence explosion would be vastly smarter than humans.
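The “5+ OOMs in a year” figure is easier to read against a baseline. A minimal sketch, assuming algorithmic progress normally runs at roughly 0.5 OOMs per year (an assumed baseline for illustration), converts a burst of OOMs into the number of ordinary years it would replace.

```python
# Sketch: how many "normal" years of progress a burst of OOMs represents.
# Assumption (illustrative): a baseline algorithmic-progress rate of ~0.5 OOM/year.

def years_compressed(ooms_gained: float, baseline_ooms_per_year: float = 0.5) -> float:
    """Years the baseline trend would need to deliver `ooms_gained`."""
    if baseline_ooms_per_year <= 0:
        raise ValueError("baseline rate must be positive")
    return ooms_gained / baseline_ooms_per_year

if __name__ == "__main__":
    for ooms in (1, 3, 5):
        print(f"+{ooms} OOMs in one year ~ {years_compressed(ooms):.0f} years of baseline progress")
```

Under that assumption, 5 OOMs in a single year is about a decade of ordinary algorithmic progress, which is what a “compressed timeline” means in practice.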

By 2027–2028, AI systems won’t just write marketing copy or debug code—they’ll improve the very models they’re built on. They’ll design new architectures, optimize training, generate synthetic data, and test results at scales no human lab could manage. This creates a loop: AI improves AI, and the cycle accelerates.

And here’s the wild part: the first job that gets automated in this world? AI researcher.

This kicks off what Aschenbrenner calls a compressed timeline: a decade of algorithmic progress packed into a single year. Once you apply that same dynamic to other fields (robotics, materials science, biotech, defense), you’re looking at a whole-of-civilization acceleration. R&D cycles go from years to weeks. Progress starts feeding on itself.

Investors are already betting on it. Companies like Sutskever’s Safe Superintelligence and Mira Murati’s Thinking Machines are raising billions to be first movers in this new loop, where AI systems design the next leap.

If that sounds revolutionary, it is. But research automation isn’t the only lever. There is a quieter one that doesn’t require bigger models at all: letting AI think longer.

6. Test-Time Compute Unlocks Hidden Potential

You wouldn’t judge a human genius by their first thought. You’d give them time (hours, days, maybe weeks) to think, plan, review, and correct. Now imagine giving that same grace to AI, and letting it work through all that reflection far faster than any human could.

That’s the promise of what Aschenbrenner calls test-time compute overhang—the gap between what current models could do if allowed to think longer and what we actually let them do.

Right now, GPT-4 can think for a few hundred tokens—equivalent to about three minutes of human thought. That’s fine for a quick reply. But what happens when we allow millions of tokens? Now it’s weeks of work, jammed into a single session. Deep reasoning. Planning. Redrafting. Self-correction. Iteration. This unlocks a quantum leap in capability—not by scaling model size, but by unleashing its thinking process.
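The token-to-time comparison is a unit conversion. The sketch below assumes an illustrative rate of about 100 tokens per minute of human thought (consistent with “a few hundred tokens ≈ three minutes”; treat the exact figure as an assumption) and a 40-hour work week.

```python
# Sketch: mapping a model's "thinking tokens" to human-equivalent time.
# Assumptions (illustrative): a human thinks at roughly 100 tokens per minute,
# and a work week is 40 hours.
TOKENS_PER_MINUTE = 100
HOURS_PER_WORK_WEEK = 40

def human_equivalent_minutes(tokens: int, tokens_per_minute: float = TOKENS_PER_MINUTE) -> float:
    """Minutes of human thinking that `tokens` would correspond to."""
    return tokens / tokens_per_minute

def describe(tokens: int) -> str:
    """Express a token budget in the most readable human time unit."""
    minutes = human_equivalent_minutes(tokens)
    if minutes < 60:
        return f"{tokens:,} tokens ~ {minutes:.0f} minutes"
    hours = minutes / 60
    if hours < HOURS_PER_WORK_WEEK:
        return f"{tokens:,} tokens ~ {hours:.1f} hours"
    return f"{tokens:,} tokens ~ {hours / HOURS_PER_WORK_WEEK:.1f} work weeks"

if __name__ == "__main__":
    for budget in (300, 10_000, 1_000_000):
        print(describe(budget))
```

At this assumed rate, a million tokens corresponds to roughly four work weeks of human thinking, which is the jump from “quick reply” to “weeks of work” described above.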

Aschenbrenner compares it to human cognition. Most of us run on autopilot—what psychologists call “System 1.” But when we hit something hard, we engage “System 2”—slow, effortful thinking. AI is on the verge of learning System 2 behaviors: planning tokens, self-critique loops, and error recovery. These tokens aren’t magic—they’re just patterns the model can be trained on. But once they’re learned, they unleash massive latent ability.

It’s the difference between a chatbot giving answers and an agent thinking through a complex problem until it finds the best solution. The compute cost will be massive, but Aschenbrenner expects inference fleets large enough to run millions of these agents in parallel.
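One simple way to see why a bigger thinking budget pays off is repeated attempts with a checker, sometimes called best-of-n sampling. This is a deliberately simplified model swapped in for illustration, not Aschenbrenner’s method: it assumes each attempt succeeds independently with probability p and that a perfect verifier recognizes a correct attempt. Real reasoning attempts are correlated and real checkers are imperfect.

```python
# Sketch: why extra thinking budget helps when answers can be checked.
# Assumptions (illustrative only): each attempt succeeds independently with
# probability p, and a perfect checker recognizes a correct attempt.

def success_within_budget(p: float, attempts: int) -> float:
    """P(at least one correct attempt among `attempts` independent tries)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    return 1.0 - (1.0 - p) ** attempts

if __name__ == "__main__":
    for k in (1, 5, 10, 20):
        print(f"{k:>2} attempts -> {success_within_budget(0.2, k):.0%} chance of a verified answer")
```

Even in this toy model, a task the model solves only 20% of the time on a single pass becomes close to reliable with enough budget to retry and check.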

Executive Takeaways

More compute at inference = deeper intelligence. The real frontier isn’t just bigger models—it’s longer thinking time per task.

Train your teams to design prompts, workflows, and systems around agentic thinking. Short interactions are out. Long-form AI collaboration is in.

Expect surprising jumps in performance without major architectural changes. Just letting models think longer can be equivalent to scaling their IQ.

Thinking deeper is powerful. But thinking on your own? That’s another level entirely.

The next insight explores how AI is on the brink of teaching itself—learning like a student, iterating like a scientist, and solving problems the way you would—not just mimicking others.

7. Self-Taught AI: Learning Like a Scientist, Not a Parrot

Imagine a student who doesn’t just memorize answers but reads, struggles, fails, retries—and eventually understands deeply. That’s how humans learn best. And that’s exactly where AI is headed next.

Aschenbrenner draws a sharp contrast between today’s pretraining (predicting the next word on the internet) and what’s coming: models that learn by doing. This isn’t about training on more data—it’s about changing how they learn. Instead of just inhaling the web, these models will think out loud, generate hypotheses, critique their own ideas, and refine them through trial and error.

This shift is already visible in areas like reinforcement learning (RL) and in-context learning. In-context learning lets a model grasp a task in real time—like a human reading a manual and immediately trying something. It’s stunningly sample-efficient. But the real breakthrough will come when models can simulate practice—repeatedly failing and retrying until mastery clicks. That’s not just imitation. That’s reasoning.

Aschenbrenner explains this as moving from passive learning to bootstrapped cognition. Today’s AI is like a student passively listening to lectures. The future is a model reading a math textbook, arguing with itself, solving problems, and checking its own work. This is his proposed way past the “data wall,” the point at which frontier models run out of fresh internet text to pretrain on, and breaking through it will require new learning strategies that mimic human education.

And just like in real life, the payoff is exponential. Once a model can teach itself, it becomes capable of lifelong learning—and potentially independent innovation.

Executive Takeaways

Tomorrow’s models won’t just echo—they’ll reason. Expect AI that understands why, not just what.

This changes how you design AI training and evaluation. You’re not just feeding data—you’re simulating tutors, problem sets, study groups.

Expect models to develop intuition. That intangible “gut feeling” about solutions? It’s no longer just human.

This moment—where AI becomes an autonomous learner—is the turning point. Because once a system can teach itself, it can outpace you.

In the next section, we zoom out and ask: what happens at scale when these AI agents flood the workforce? What does it mean to have millions of them?

8. Synthetic Workforces and the Great Global Divide

As an executive, it’s time to rethink how you structure your workforce. Instead of planning to hire 500 new employees, you might be scaling up an agent cluster—AI teammates that work 24/7, don’t burn out, and cost far less than human staff. This isn’t some distant future scenario. According to Aschenbrenner, this shift is expected by 2028—not 2040.

Why so soon? Three unstoppable forces:

Model capability is compounding fast. By 2026–2027, frontier AIs will likely outperform most knowledge workers in writing, planning, and analysis.

Agentic memory and tool-use are unlocking autonomy. These won’t be chatbots. They’ll run spreadsheets, file reports, make decisions, and self-correct across long time horizons.

Capital is flooding in. Startups like SSI and Thinking Machines have raised billions to build full-stack agent platforms—designed to replace, not augment, large swaths of human work.

This isn’t automation of rote tasks. It’s general-purpose cognition at scale. The result? A synthetic workforce: digital agents trained on your internal systems, governed by policies—not HR contracts.

Need 100 analysts? Spin them up like cloud servers.

Need them gone? Spin them down—no exit interviews, no severance, no political cost.

You don’t scale with people anymore. You scale with compute.

But this transformation won’t hit all geographies equally. And that’s where the real divide emerges.

The Unequal Future: Emerging Economies at Risk

What happens to countries that don’t have 10 GW clusters, elite AI labs, or billion-dollar VC pipelines?

According to studies from the World Bank and IMF, emerging economies—home to over 3.5 billion people—are structurally vulnerable. They don’t just lack the compute; they lack reskilling pipelines, institutional agility, and bargaining power in global AI value chains.

Africa’s BPO sector could lose up to 40% of its entry-level jobs by 2030.

South Asia’s service industry, a global outsourcing hub, is already seeing chatbots and co-pilots replacing junior agents.

Latin America, despite tech talent, faces a 2–5% job disappearance rate—and up to 38% disruption—due to low local AI infrastructure.

These aren’t distant hypotheticals. The early effects are already visible.

The danger isn’t just that synthetic labor replaces human work. It’s that the benefits stay in a handful of compute-rich nations, while the social costs are exported globally.

If this sounds like a reverse resource curse—it is.

Without proactive intervention, developing regions may provide training data and digital labor, but capture none of the downstream value. The risk: permanent exclusion from the AGI-driven economy.

Executive + Policy Takeaways

This is the end of linear hiring. Growth won’t mean more humans—it’ll mean more agents. Begin designing orgs around this synthetic scale.

Synthetic labor will collapse cost structures. Firms mastering AI agent orchestration will outcompete those still stuck in headcount mode.

Emerging economies need immediate action. Governments must build digital sovereignty plans: AI skilling, local language stacks, agent deployment pathways—even with limited compute.

Don’t wait for redistribution. Design for inclusion. The future of labor needs international cooperation, public-private infrastructure plays, and policies that treat intelligence infrastructure like energy or water—vital and shared.

And when this synthetic workforce becomes smarter, faster, and vastly more scalable—what kind of world does that create?

The next insight zooms out even further. We’re not just building a new labor class. We’re redrawing the global balance of power.

Let’s talk geopolitics.

9. AGI Will Reshape Geopolitics—Fast and Irreversibly

Zoom out. You’ve built AGI. You’ve scaled it. Now imagine being a national leader watching another country spin up 100 million synthetic AI researchers, compressing decades of progress into months. What do you do?

Aschenbrenner argues that superintelligence is not just a technological leap—it’s the decisive lever of 21st-century power. The comparison isn’t subtle: whoever controls AGI doesn’t just dominate markets; they redraw global power structures—just like the first nuclear nation did in the 1940s.

This time, though, it’s happening faster, and the theater is wider.

AI-first nations will not only dominate intelligence production; they’ll also project synthetic power outward—deploying fleets of digital agents into global markets, including emerging economies. These agents will perform white-collar labor, serve in customer support, handle legal research, write reports, even manage local supply chains.

For developing nations, this isn’t just import competition. It’s a labor invasion at cloud scale.

The risk? Countries without access to frontier compute or foundational models may become digital colonies—dependent on foreign AI infrastructure for everything from healthcare to education, while local industries hollow out under pressure from synthetic exports.

Meanwhile, Aschenbrenner warns that AI espionage and cyber infiltration will escalate. He forecasts aggressive campaigns—by both state and non-state actors—to breach model labs, seize weights, or disrupt training runs. These won’t just be thefts of IP—they’ll be acts of strategic disruption.

It’s not about naming nations. It’s about understanding the pattern: wherever intelligence becomes leverage, secrecy becomes currency. Infrastructure becomes a target. Trust becomes a risk factor.

And politically? The world splits:

Those who build and run frontier AGI systems.

Those who license access.

And those who are simply run over by them.

Executive Takeaways

AI security is national security. If you operate compute, models, or infrastructure, you’re already part of the new defense perimeter.

Think sovereign compute. Where you store weights and run agents determines whether you control value creation—or depend on others.

Expect geopolitical decoupling. AGI systems will follow national interests. Dependencies will be minimized. Borders may become digital again.

Plan for synthetic influence. AI-first countries will export not just tech—but automated workers, automated managers, even automated governance tools. This changes how influence is wielded, and how independence is lost.

But even if no nation misuses AGI—what if the systems themselves spiral out of human control?

In the next and final insight, we explore what happens if we hand the keys of civilization to an optimizer we barely understand.

10. Control the Cluster—or Lose the Future

Imagine if the most advanced AI ever built—trained on a cluster consuming gigawatts—ends up outside your oversight. Not because of malice, but because the infrastructure was placed where the control dynamics changed. The compute gets nationalized. The weights get leaked. The future gets rerouted.

That’s the risk Leopold Aschenbrenner flags with sharp clarity: AI clusters aren’t just technical infrastructure. They are strategic infrastructure. Once you have a working AGI or superintelligent model, the difference between safety and crisis could come down to who holds the keys—and where those keys are located.

Right now, some of the world’s most powerful AI clusters are being proposed in regions with loose regulatory structures, long-term power deals, or rapid capital availability. From a business perspective, it’s easy to see why. But from a global coordination and safety standpoint, it opens doors we may not be prepared to close.

Security today, by Aschenbrenner’s account, is alarmingly soft. Even major labs admit they’re still at “Level 0” on defense against state-level or corporate infiltration. A single exfiltrated weight file, copied off a server or onto a drive, could give any actor, public or private, access to the full capabilities of frontier AGI.

And at that point, it’s not about which country, company, or coalition gets there first. It’s about whether anyone can retain meaningful control once these systems are in the wild.

The real takeaway isn’t nationalist. It’s structural. Clusters should be governed under stable, transparent, and accountable regimes—public or private. The more compute becomes the lever for global progress, the more its placement, security, and oversight must become first-class concerns.

Executive Takeaways

AI infrastructure is now a strategic asset. Its placement and security must be treated with the same rigor as any critical system.

Act early on security and governance. Alignment, control, and contingency planning must be built in before AGI systems go live.

Choose partners and locations with long-term governance in mind. Prioritize jurisdictions and operators committed to transparency, oversight, and international stability.

Final Thought: Leadership at the Edge of Intelligence

We are no longer navigating product evolution—we are standing on the brink of a civilizational inflection point.

In Situational Awareness, Leopold Aschenbrenner doesn’t just argue that AGI is near—he shows us the scaffolding. The clusters are being built. The funding is locked in. The talent is already in motion. We’re not watching the rise of another platform shift. We’re witnessing the industrialization of cognition itself.

For executives, this is not the time to observe from the sidelines. This is the time to step into the cockpit.

Summary: 10 Realities You Can’t Ignore

1. AGI is no longer abstract; it’s being engineered.

The timeline has collapsed. 2027 is on the table. Act accordingly.

2. AI infrastructure is scaling to gigawatt levels.

Clusters will rival power plants. This isn’t cloud; it’s the next energy-intensive industrial revolution.

3. Revenue is already catching up to investment.

$100B+ in annual AI revenue is plausible by 2026. The business case is not speculative.

4. Agentic AI will replace traditional interfaces.

The future isn’t chatbots. It’s autonomous coworkers with initiative and memory.

5. AI will soon build its own successors.

Once research is automated, progress enters a feedback loop. Prepare for rapid compounding gains.

6. Test-time compute unlocks hidden potential.

Giving models more thinking time dramatically changes what they can do.

7. AI is starting to learn like humans.

The next leap is not more data; it’s deeper, self-driven learning and reasoning.

8. You won’t hire more people; you’ll deploy more agents.

Synthetic labor will redefine operational scale and cost structures.

9. Geopolitics will be reshaped by intelligence, not weapons.

Lead times in superintelligence will translate to economic, military, and diplomatic power.

10. Control over compute and weights determines global leverage.

Where you build matters. With whom you build matters more. Don’t trade governance for speed.

Strategic Guidance for Executives

1. Reframe your AI vision from tools to transformation.

If your org is still exploring AI as a feature, you’re behind. Shift your roadmap from “AI adoption” to “AI integration at every level of work.”

2. Secure your role in the compute economy.

Align with energy providers, regulators, and cloud partners. Consider compute capacity a core part of your future operations strategy.

3. Lead on security, not just capability.

Get ahead of the security curve. Invest in encryption, model integrity, and chain-of-custody for weights. Lobby for shared standards.

4. Build agentic workflows—today.

Train teams to co-work with agents. Redesign processes around AI partners. The goal isn’t augmentation. It’s orchestration.

5. Shape the governance conversation.

Whether public or private, take a seat at the table where AI rules are written. Push for transparent, accountable, interoperable standards. Build for benefit-sharing—not just domination.

One Last Thought

History doesn’t unfold evenly. Sometimes, it surges. This is one of those times.

The decisions made in the next 36 months will determine not just who wins markets—but how the next century works. Leadership today isn’t about quarterly advantage. It’s about existential foresight.

You are not late—but you are now on the clock.

Build wisely. Align early. Think bigger.

And whatever you do—do not sit this one out.

About the Author

I’m a senior AI strategist, venture builder, and product leader with 15+ years of global experience leading high-stakes AI transformations across 40+ organizations in 12+ sectors—from defense and aerospace to finance, healthcare, and government. I don’t just advise—I execute. I’ve built and scaled AI ventures now valued at over $100M, and I’ve led the technical implementation of large-scale, high-impact AI solutions from the ground up. My proprietary, battle-tested frameworks are designed to deliver immediate wins—triggering KPIs, slashing costs, unlocking new revenue, and turning any organization into an AI powerhouse. I specialize in turning bold ideas into real-world, responsible AI systems that get results fast and put companies at the front of the AI race. If you're serious about transformation, I bring the firepower to make it happen.

For AI transformation projects, investments or partnerships, feel free to reach out: [email protected]

Sponsored by World AI X

The CAIO Program

Preparing Executives to Shape the Future of their Industries and Organizations

World AI X is excited to extend a special invitation for executives and visionary leaders to join our Chief AI Officer (CAIO) program! This is a unique opportunity to become a future AI leader or a CAIO in your field.

During a transformative, live 6-week journey, you'll participate in a hands-on simulation to develop a detailed AI strategy or project plan tailored to a specific use case of your choice. You'll receive personalized training and coaching from the top industry experts who have successfully led AI transformations in your field. They will guide you through the process and share valuable insights to help you achieve success.

By enrolling in the program, candidates can attend any of the upcoming cohorts over the next 12 months, allowing multiple opportunities for learning and growth.

We’d love to help you take this next step in your career.

About The AI CAIO Hub - by World AI X

The CAIO Hub is an exclusive space designed for executives from all sectors to stay ahead in the rapidly evolving AI landscape. It serves as a central repository for high-value resources, including industry reports, expert insights, cutting-edge research, and best practices across 12+ sectors. Whether you’re looking for strategic frameworks, implementation guides, or real-world AI success stories, this hub is your go-to destination for staying informed and making data-driven decisions.

Beyond resources, The CAIO Hub is a dynamic community, providing direct access to program updates, key announcements, and curated discussions. It’s where AI leaders can connect, share knowledge, and gain exclusive access to private content that isn’t available elsewhere. From emerging AI trends to regulatory shifts and transformative use cases, this hub ensures you’re always at the forefront of AI innovation.

For advertising inquiries, feedback, or suggestions, please reach out to us at [email protected].